
Week 3: Black Code/Databases/Humans (Code Example)

Let's get into it!

In this thread, we've discussed spreadsheets and whether spreadsheets include code and count as programming. Much of the digital work on histories of slavery happens in a world of databases and network analysis. There is also a historical relationship between black codes as slave codes and what Lauren Cramer and Alessandra Raengo distinguish as "black coding," or the more self-conscious hacking of systems and code by black and other racialized subjects. Given both, it is worth taking a moment to explore what assumptions we make about what code and race do.

Afro-Louisiana History and Genealogy is a database of over 100,000 enslaved and free Africans and people of African descent transported to (or freed in) Gulf Coast Louisiana between 1718 and 1820 - from the founding of Louisiana by the French and through the first eighteen years of United States governance. The database team was led by Gwendolyn Midlo Hall, author of Africans in Colonial Louisiana: The Development of Afro-Creole Culture in the Eighteenth Century. In Africans in Colonial Louisiana, Hall argued that Africans transported from the African continent to the Gulf Coast founded an Afro-creole culture, one deeply rooted in Senegambian lifeways, lifeways that then significantly influenced the Gulf Coast culture that would develop over time. Hall wrote an African-centered diasporic history at a time when such connections were seen as illegitimate, pandering, false, and unseemly (at best).

How does a team of researchers code (or encode) enslaved women, children, and men in a context where their existence was seen as a problem? What kind of critical gaze did the team apply to the analysis while also salvaging some of the humanity of the enslaved?

(For reference, and from an entirely different field of study, the archaeologists from Howard University unearthing the enslaved interred at what is now New York's African Burial Ground National Monument faced a similar stigma and critical conundrum. See Alondra Nelson's Social Life of DNA for more.)

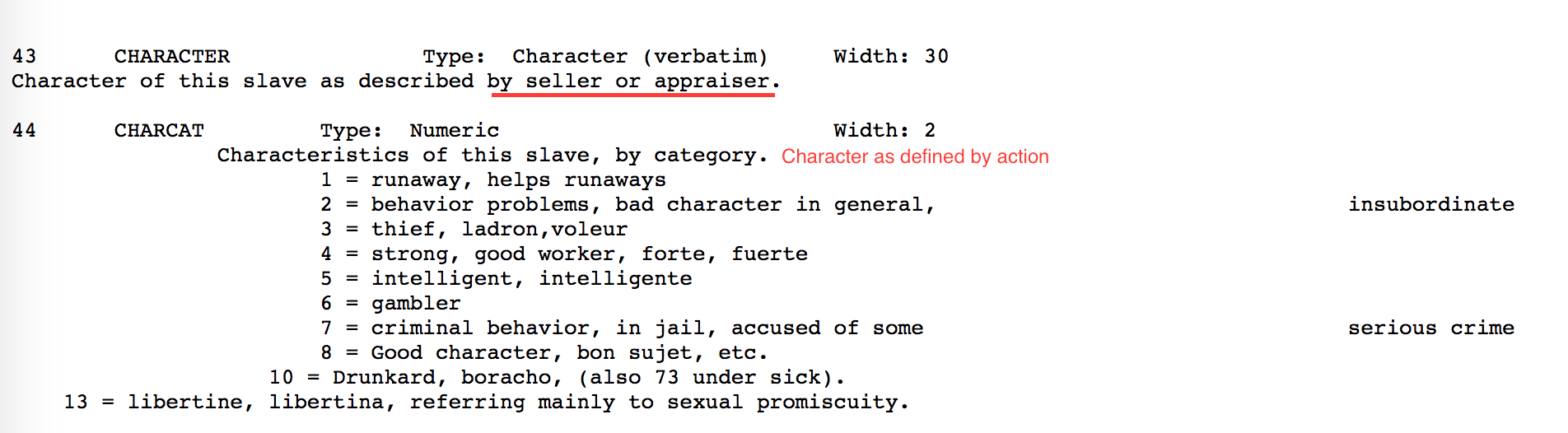

In response, Hall and her team coded enslaved persons like so for use in SPSS databases --

Slave Database Codes:

Click here

Free Database Codes:

These spreadsheets were first released on CD-ROM in 2000. They were later compiled and re-released by the Center for the Public Domain and ibiblio.org so they could be shared for free and made available to the public: https://www.ibiblio.org/laslave/about.php and https://www.ibiblio.org/laslave/introduction.php.

Another example of code, slavery and the database is the way the team around the Trans-Atlantic Slave Trade Database encoded their data. Containing information on over 25,000 slave ship voyages, the slave trade database also makes its dataset freely available here.

Trans-Atlantic Slave Trade Database

While an invaluable tool, the database and the database impulse have also been critiqued for the ways they risk re-commodifying enslaved Africans--just this time in spreadsheets and database files.

And although spreadsheets and formulas can be debated as code or not code, programming or not programming, the practice of coding relies on encoding features that are kin in each of these instances--and are also descendants of codes used in the “unmaking” of black humanity that was the Middle Passage and that Atlantic Project.

A final example -- W. Caleb McDaniel created the @Every3Minutes twitter bot after reflecting on Herbert Gutman’s argument that every three minutes an enslaved man, woman, or child was sold in the domestic slave trade during the antebellum period in the United States:

“about two million slaves (men, women, and children) were sold in local, interstate, and interregional markets between 1820 and 1860, and that of this number perhaps as many as 260,000 were married men and women and another 186,000 were children under the age of thirteen. If we assume that slave sales did not occur on Sundays and holidays and that such selling went on for ten hours on working days, a slave was sold on average every 3.6 minutes between 1820 and 1860.”
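For anyone who wants to check the arithmetic behind the 3.6-minute figure, here is a minimal Python sketch. The total number of sales, the 40-year span, and the ten-hour day come from the quoted passage. The number of working days per year is not stated there; 300 is my assumption (roughly a year minus Sundays and some holidays), chosen because it reproduces the quoted figure.

```python
# Rough reconstruction of the arithmetic behind "every 3.6 minutes".
TOTAL_SALES = 2_000_000        # from the quoted passage
YEARS = 1860 - 1820            # 40 years, from the quoted passage
HOURS_PER_DAY = 10             # from the quoted passage
WORKING_DAYS_PER_YEAR = 300    # ASSUMPTION: not stated in the quote


def minutes_per_sale(total_sales, years, working_days, hours_per_day):
    """Average number of minutes between sales under the stated assumptions."""
    total_minutes = years * working_days * hours_per_day * 60
    return total_minutes / total_sales


interval = minutes_per_sale(
    TOTAL_SALES, YEARS, WORKING_DAYS_PER_YEAR, HOURS_PER_DAY
)
print(f"One sale every {interval:.1f} minutes")
```

Even this small calculation already does what the thread is worried about: it names people as `TOTAL_SALES` and divides them into minutes.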

McDaniel wrote a Python script for the bot, then discovered that “variables forced me to attend to “an enslaved person” as someone bearing multiple relationships to other persons. The code also soon involved me, unwittingly, in a troubling objectification of the human individuals whose stories I was attempting to conjure.” Read his blog post on the experience here.

Link to his script here.

Gwendolyn Midlo Hall’s code book is a direct line to having to choose variables to direct a Twitter bot not to re-objectify enslaved people.

Some questions to consider:

What is the responsibility of coders in attending to the coding or encoding of historical subjects already vulnerable to commodification?

How did McDaniel and Hall address the question of race, racialization, and commodification in their code?

If you had an example--like this one--could you turn the code against itself and without changing any of the given data work against the dehumanization impulse of the archive?

Since even color is a social construction, how useful are these codes anyway?

Comments

2. How did McDaniel and Hall address the question of race, racialization, and commodification in their code?

McDaniel's code and his subsequent blog post on @Every3Minutes make clear the difficulty of attending to others within an object-oriented language. At the same time that the code is itself an intervention, the code itself remains a system that has its own limitations.

I'm struck by this passage from McDaniel's GitHub:

I understand using a passive form as an attempt at a more humanizing statement, but the passive form evades attributing the verb to a subject: for instance, with "A slave was just sold." Yes, but sold by whom?

One text that deals well with the issue of verbs in a media context -- despite not being about code -- is Cornelia Vismann's "Cultural Techniques and Sovereignty." I have to run out the door, but I wanted to mention it because of how it addresses the Greek "medium voice" whereby with bathing, for instance, the bather is carried by the water. I bring this up because it might be fruitful to think differently about attribution, outside of the passive-active construction. This, despite the difficulties inherent in Python!

Wow I get something new here

One of the things that grabs me right away about Gwendolyn Midlo Hall’s code book examples is the way that the codes register traces of how a community of researchers put themselves into the project, in such a way that their names are code indexes that are part of the database structure.

For example in "CODES FOR SLAVE DATABASE", the Notary is a 20-character text field -- it could be anyone, and that is stored in as data:

...but the Coder is a 2-digit ID, a lookup for a fixed list of the names of (some of the?) project participants. (07 and 08 are missing.)

By comparison and contrast, in "LOUISIANA FREE DATABASE CODESHEET" some members of a community of key people enabling the research are also written directly into the code by name, and they are also recorded as a short-list of 2-digit numbers. However, in this case, they are DEPOTS -- or archons, keepers of the "Depository in which this document is housed." Gwendolyn Hall is CODER 01, and she is also DEPOT 55:

This is not to say that Hall is made subject or instrumentalized by the database design or by the data encoding in the same ways as the slave records. However it is interesting to think about this as a point of engagement, possibly even a trace of bearing witness: what does one do in the process of self-reflexively encoding oneself as a CODER of other subjects, both for good and for ill?
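To make the contrast between the two kinds of field concrete, here is a minimal Python sketch. It is not the project's actual schema, which lives in SPSS/database files. The `Record` class, the `CODERS` table, and the placeholder entries are hypothetical; only the 20-character Notary width, Hall as CODER 01, and the absence of 07 and 08 come from the code sheet as described above.

```python
from dataclasses import dataclass
from typing import Optional

NOTARY_WIDTH = 20  # the code sheet gives Notary as a 20-character text field

# HYPOTHETICAL lookup table. Only the entry for code 1 comes from the thread;
# the placeholder entries are invented for illustration. Codes 7 and 8 are
# deliberately absent, mirroring the gap noted in the code sheet.
CODERS = {
    1: "Gwendolyn Midlo Hall",
    2: "<participant 02>",
    6: "<participant 06>",
    9: "<participant 09>",
}


@dataclass
class Record:
    notary: str  # free text: whatever name appears in the source document
    coder: int   # 2-digit ID: only meaningful via the lookup table

    def __post_init__(self):
        # ASSUMPTION: a fixed-width text field truncates longer values.
        self.notary = self.notary[:NOTARY_WIDTH]


def coder_name(record: Record) -> Optional[str]:
    """Resolve the coder ID to a name, or None if the ID has no entry."""
    return CODERS.get(record.coder)


rec = Record(notary="A hypothetical notary with a long name", coder=1)
print(repr(rec.notary), coder_name(rec))
print(coder_name(Record(notary="Anyone", coder=7)))
```

The notary could be anyone and is stored as data; the coder can only be someone the table already names, which is one way the researchers are written into the structure itself.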

@jeremydouglass


So I really love this because hopefully we will discuss soon the ways that revealing yourself as the author of the code, project, database, etc. is actually an important part of thinking about ethics, accountability, and ways to humanize coding. Hopefully Safiya will also talk some about Google as an opaque, in some ways, operating system that naturalizes relations between users and programmers, but also, through its search function, naturalizes difference and precarity by how different races, ethnicities, genders, topics are coded in algorithms.

I mean...what if the programmers behind programming the Google image searches so that faces of people of African descent get tagged as gorillas were the public face of that dust up? As opposed to users never knowing (or programmers being outed as the person behind the scenes who made a mistake). What if accountability worked in our projects and in our project design from the very beginning?


I also think it is important to think about personal accountability and writing code/software development, and bringing forth the human subject behind the algorithm, but when it comes to corporations and the Google search algorithm, accountability needs to be addressed in terms of the institutional and historical nature of the way software is developed. The idea that an individual programmer might be outed for a pervasive problem like racism encoded into certain computational systems, might seem to absolve corporate responsibility. When I think back to a few years ago when facial recognition algorithms in Hewlett Packard and Flickr systems were first revealed to not detect faces with darker skin tones, one of the ‘solutions’ that was mooted was that Silicon Valley just needed to hire more black programmers, which suggests that an individual writing code for Google could single-handedly spot the error in the code, rather than such errors being generated pervasively and structurally. According to that solution, it might follow that, if racial discrimination was found in a system, then it could also be attributed back to the black programmer, who should have somehow spotted it. I’m not a professional software engineer nor have I ever worked for a large corporation, but I understand that code is rarely written from scratch, it’s built on and outwards from other code, and machine learning databases are bolted together from previous versions.

I think it’s first about separating corporate accountability and the institutional production of software from smaller scales of code production. Then it's about thinking through how, when writing code/apps/software, one can easily evade accountability: for example, open source code libraries are widely shared online, but there is rarely a sense of the user taking personal ethical accountability for the way that they extend and implement that code. An example could be the OpenCV facial recognition library that is widely used, but how many individual programmers think beyond it being a free tool, and see the necessity of thinking about the ethical implications of how they use it? To what extent do developers take the cultural issues reported on in the media on a global scale, and understand the need to take personal responsibility in their own code writing, or in their small business applications? I would love to hear about tactics for revealing oneself as the author in the code, or pointing to ethical accountability, that might also translate as code/libraries are forked and applied.

I want to think a bit about bearing witness and discomfort.

@jmjafrx asks: “What if the programmers behind programming the Google image searches so that faces of people of African descent get tagged as gorillas were the public face of that dust up? . . . What if accountability worked in our projects and in our project design from the very beginning?”

In contrast to @CatherineGriffiths, I think personal accountability would be more likely to happen if Silicon Valley hired more black programmers. Catherine wonders whether black programmers would be burdened with debugging racism from code, or scapegoated for not catching it. Corporations would be incentivized to engage in such transactions, eschewing responsibility for the baked-in-ness of “bolted together” racist code that might be so corrupt, there’s no modular way to excise it.

Fair enough.

Hiring more black programmers wouldn’t unbolt the code. But it might create a work environment that resists tacit acceptance of racism and/or white supremacy. It might make the programmers who wrote the code tagging people of African descent as gorillas uncomfortable that people might catch them, out them. They might decide it's not worth the risk of feeling ashamed, or getting a negative performance review.

Black programmers are less than 5% of SV employees. More precisely: "In most cases, black people represent less than 2% of the total. For example, Google and Yahoo disclosed that their workforces were 1% black last year, while Twitter was close to 0%. Earlier this month [July 2016], Facebook said only 2% of its US workforce was black."

The feminist games community has been very clear that “toxic geek masculinity,” as Anastasia Salter and Bridget Blodgett write in their new book of that title, is enabled by overtly sexist game dev work environments.

The 2012 book “Team Geek,” authored by two Google managers of large engineering teams, pivots on how to build cultures of respect and trust at SV corporations like Google. Respect and trust in a monoculture looks different--and enables a different standard of conduct--than that of a racially and culturally diverse culture.

I’m struck by @jeremydouglass' notion that Gwendolyn Hall encodes herself into the database as CODER and DEPOT as, potentially, “an act of witness.” I like this gloss because it glimpses a gesture of parity. Hall commits to balance, if nothing else: a gesture that can’t act as any kind of counterweight to the slaves’ lives parsed and encoded, but at least recognizes that while balance is impossible, transparency and respect are not.

We have developed the BCL (Beloved Community License) as a framework for system change, accountability and ethics in technology.

https://douglass.io/the-bcl

https://www.gitsoul.com/BCL/BCL/src/master/README.md

The BCL's starting point is to position the Coder/Code/User as "Abolitionist".

"The BCL is a Non Violent Software License that is created out of Love, Peace, and Purpose.

The BCL Software License is based on an understanding and recognition of: Martin Luther King’s “Beloved Community”, “Gandhian Nonviolence”, and a Spiritual Activism that pursues a future of Freedom through ending the struggle of the oppressed Multitudes of the World.

As Technologist and Spiritual Activist, we are building a modern future of: Freedom, Spirituality, and Fulfillment for all. In this new modern World, Violence, Poverty, Racism, Sexism, Prisons, and Militarism cannot survive.

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

Recognition and Possibilities

Software and Hardware issued under the BCL Software License will be used to improve the lives of the Multitudes of the World that are oppressed and in struggle.

Software and Hardware issued under the BCL License will be used to foster the development of equitable and inclusive political and economic spaces.

Whenever it is feasible the Software and Hardware issued under the BCL License shall be used to enable the enfranchisement of the incarcerated into the daily activities of our communities.

By using Software and Hardware Issued under the BCL License you recognize the spiritual divinity of every person with special attention to the hungry, the homeless and the oppressed.

By using Software and Hardware Issued under the BCL License you recognize the sanctity of the earth (by reducing extraction without adequate compensation, restoration and regeneration).

By using Software and Hardware Issued under the BCL License you recognize the humanity of the incarcerated.

By using Software and Hardware Issued under the BCL License you recognize that reparations and atonement are a part of a needed healing process for the oppressed Multitude.

Usage Restrictions

Software or Hardware issued under the BCL License cannot be used for any violent purpose.

Software or Hardware issued under the BCL License cannot be used for surveillance of any kind.

Software or Hardware issued under the BCL License cannot be used for war.

Software or Hardware issued under the BCL License cannot be used to support Military activities.

Software or Hardware issued under the BCL cannot be used to inflict violence upon the earth.

Software or Hardware issued under the BCL License cannot be used by institutions of incarceration.

Software or Hardware issued under the BCL License cannot be used to support the activities of Institutions of Incarceration."

A large majority of the stack/code/design/interfaces have been and continue to be developed inside of and in support of violent and oppressive neo colonialism.

Code that does not seek to bring about new equitable political and economic space for the oppressed is code that is in service to the status quo.

All code is "Political".

"Successful" code that exists under current Open Source License schemes is oftentimes used by oppressive states and their ideologues to further inflict the violence and oppression that is part and parcel of Racial Capitalism.

@CatherineGriffiths writes:

These are very important questions and they have global implications. Global South and francophone African and Caribbean digital humanities scholars recently discussed the ways "open access" may actually re-center and privilege Western conceptions of knowledge and science, because open access options are still limited to the Global North (especially England and the U.S.) and open access isn't being interrogated for these very issues, Catherine. So if open access (or adding black programmers) becomes the solution, how do we get to the underlying systemic issues? And that applies to race but also to all manner of difference.

(In case anyone wants to read a summary of the conversation: Andy Nobes, “Must We Decolonise Open Access? Perspectives from Francophone Africa – Journalologik,” accessed January 3, 2018.)

Now...solutions though!

Throw some examples out of how we might in large and small ways reimagine authorship, encode social justice in our programming!

@KIBerens writes:

This is also a really important point! Who is doing the programming actually does matter.

I mean, but can we also just appreciate for a moment how much care Hall and her team put into the complexities of African identity--at a time when slaves weren't even seen in the historiography to have complex histories or pasts?? As coders, the background knowledge they needed to have about African history and the African continent was immense. See:

This kind of coding gestures to the diversity of languages in the archive, at the time period in Louisiana, and among Africans themselves!

So when black feminist and queer of color scholars talk about the many arrangements race, sex, and gender create over time and place, Hall and her team are, in a sense, encoding those arrangements with impressive rigor (at least to me).

What do others think?

I'm interested in those critiques of the dehumanizing action of representing enslaved people in databases when producing research materials. It strikes me as vastly different from say a slave trader creating lists of the humans they are about to trade. Context seems key here.

But I did find something that struck my humanity in these codes, and that was the index of codes for illnesses. Seeing this list of numbers for representing the vast numbers of ailments to be cataloged, hit me with a force that seemed the opposite of a dehumanizing impact. So I think it's important to see the meaning and impact of these codes and encoding as one with no less deterministic outcomes than any other form of symbolic means of communication.

@KIBerens said: " It might make the programmers who wrote the code tagging people of African descent as gorillas uncomfortable that people might catch them, out them. They might decide it's not worth the risk of feeling ashamed, or getting a negative performance review."

I know. I am a proud African, but my objective/critical mind tells me this says more about the programmers' worldview and their perception of Africans. Their thinking is inspired by a Darwinian evolutionary perspective. It is obvious that I am not a gorilla, and yet Darwinism is widely believed in the Western world. In all the time we have been living in Africa, we have never seen a gorilla that grew into a human being, even in the Congo forest or the Kenya forest.

Reading through Hall's code, I'm a bit confused by

This comment above is situated after the list of illnesses, and before the classification of mothers. It's a vanishing point, where Hall's methodical classifications stop short of attempting to parse heterogeneity at the risk of misclassification. The gesture validates Hall's confidence in the parameters of the other classifications. (I was struck by the long list of jobs and skills: it painted a vivid picture of slave expertise, which was itself classed in four gradations.)

What is this field Hall calls "extremely important" but deems its details "too long and varied to be coded"?

Might this field mark the place where slave self-representation might have been?

I say this because earlier in the code, a slave's character is defined by the owner's description, and then by the slave's encoded actions. There was real effort to identify ranges of slaves' moral, ethical character.

Hall's comment on the "extremely important field" marks the place where "character" can't be represented in discrete actions or mercantile descriptions. And yet rather than excise it, she leaves it in as a marker of what can't be legible.

(This reminds me of craft #137 in the list: "writing, sign name.")

What do others make of what this "extremely important field" might be?

I join you, @jmjafrx, in appreciating the level of care Hall's classifications manifest.

I think this is a great example of the importance of context. In this discussion and in the context of the Louisiana Slave Database, this list of ailments is moving, but it is data extracted from records that list potential problems in the commodified bodies of the slaves. From the project description, for any who haven't read it yet:

Given that databases today are still the way to do inventory control, we could ask whether the technologies for search and retrieval, and the requirements of database fields and tables, simply re-inscribe the logics of these past documents. Beyond recovery (a valuable task in itself, of course), does the interface provide any critical intervention that recontextualizes these documents? I would love, for example, to see every document transcribed and shared for text analysis, with critical engagements from scholars provided as apparatus to situate these records. There's a troubling array of databases holding information about people these days. I think of Facebook or even the NSA, tracking our engagements in far more detail than ever thought possible, learning and selling aspects of our behavior that we're not even aware of ourselves. How much does the structure of the tool (I'm thinking here, too, of how the structure and demographics of corporations like Google enable racist and sexist code) affect the meaning and possible outcomes?

This year, I'm serving as one of the co-conveners of a digital humanities reading group at my institution, and one of the questions that came up early in our discussions was whether or not we as digital humanists really have to learn how to (read) code. For most of us, the hesitance came from having one more thing to add to our tasks that we have to do individually in isolation, which is the kind of work the academy tends to value most highly.

So I think one solution might be to create more opportunities for people who are well-versed in code to collaborate with people who are well-versed in radical histories, philosophies, and pedagogies (not saying that these capacities are inherently mutually exclusive). In addition to creating the opportunities for collaboration around issues of code, we also need to keep working to change how collaborative work is valued in the academy.

It is my view that coders must be grounded in radical histories and social change theory.

For me one of the issues with coders is that by and large most have little to no understanding of the history of the oppressive systems that they are reinforcing by writing code. Most have no understanding of radical histories. Most have been decoupled from any attachment to a Commons. Most have no understanding of the externalities that are associated with the code that they write.

This brings up some interesting observations for me. One is that we are possibly in a phase in which Western Nation States, with their historical propensity for violence against brown and black bodies, are being replaced by a kind of Tech Nation State constellation that has as its primary goal a hyper neoliberalism driven by surveillance capitalism.

It is also interesting to me that the Slave Database can be seen as part of a continuum that is linked to current databases of prisoners in the United States as well as U.S. intelligence databases and applications that have been used to track revolutionary Brown and Black bodies. Here I question the coders and the product managers and the tech companies that are creating these oppressive systems.

One of the Goals of Douglass OS and the BCL (https://douglass.io/) is to foster System CHANGE that is centered on ending the struggle of the oppressed Multitudes of the World.

Douglass is an attempt to bring some dreams of emancipation and liberation into reality.

I like this idea of thinking about ways to provide a critical intervention in the tools of retrieving data. But are we suggesting that numerical representation of data is in itself dehumanizing if those numbers are drawn from other documents of control or if those numbers are not attended by their sources?

I worry a bit that the perfect is the enemy of the good here and that asking scholars to document in this way might prevent statistics-based researchers from performing the very calculations that spreadsheets enable, though the idea of documentation in the data appeals to my humanistic scholarship and to my humanity. Still, I'd be interested in examples of critical and creative uses of spreadsheets/databases in this way.

The Robert Frederick Smith Explore Your Family History Center comes to mind, particularly the Community Curation Platform, though that is not a site for statistical analysis.

But getting back to the topic of documentation, I think it's worth noting the amount of documentation these sites provide outside of the spreadsheet/database itself. Consider for example the website for the Transatlantic Slave Trade Database: Slave Voyages. In addition to the data, that website offers an extensive array of paratexts, including a section entitled Assessing the Slave Trade, Resources, and Educational Materials. Not quite the document for each datum, but at least quite a bit of context.

From a CCS point of view, if we think about spreadsheets, for example, as programming environments, we might think about the ways in which these can, and do, already provide some of these interventions in themselves. Many research-based spreadsheets already represent interventions in data.

Take for example, the 2010 Estimates Excel spreadsheet (which might be a good document to download if you don't have access to SPSS). On the one hand, it offers an attempt to create estimates of the slave trade that account for those unaccounted for.

This estimates spreadsheet is in itself an intervention, and it is attended by images and other documentation of the slave trade that seek to situate this data. Also, in the spreadsheet, there is an index with more contextualization, although again not at the level @mwidner requests.

But following @mwidner's suggestion, in the spirit of our critical and creative interventions: What more would we ask of these databases and spreadsheets? How can they be augmented to incorporate meaningful interventions? Also, as we gather to read code, I ask what kinds of interventions we can provide by examining the spreadsheets themselves. How do we read individual sections of specific spreadsheets critically?

In that spirit, I have also uploaded the Estimates spreadsheet to Google Sheets for our collective annotation and exploration. But we should of course pursue these questions further here.

This is in part because the code they are being taught to write is designed to isolate them from those larger systems. I think @Kir's opening comment on McDaniel's challenges with object-oriented language reflects this. They don't work on systems; they work on components, which are then organized into systems that reflect the values that @fredhampton identifies. While I certainly think we need to address the coders and project managers that develop these applications, we also need to realize that they too are subject to that larger organizing system (hence the need for radical histories). What is interesting is how isolated each section is from the others, especially in large-scale applications and corporate infrastructures.

Modern software design further extends these issues. @CatherineGriffiths notes that most developers didn't think about OpenCV beyond its use for their application. That is only the tip of a very large iceberg when we begin to consider the role of package library managers for languages like Ruby, Python, or Node.js. A coder may write a single package or a part of a package and, by design, escape responsibility for the systems that use that package.

One of the interesting things about the BCL is that it is designed to require just that sort of attention. It gives programmers a way to take responsibility for the code they create in a way that works against current development models. It's a refreshing change and a needed one.

For me this becomes an interesting place where the vocabulary of code isn't enough especially in the issues @fredhampton points out. That here is where other aspects like user design, and front end/back end .

As @ggimse points out coders are but part of the design and can escape responsibility, as well placating measures can be enacted in how the code is shown that do not actually change algorithms but do change what the user sees, ie: In Google the actually learning algorithm that marked people as gorillas was not changed , it just stopped surfacing that result. And Google with it's arts app created a user design that people willingly submit their faces to to increase it's learning set .

While code does the work , the UX , the context completely changed the relationship and perception folks had to the code of making facial recognition better.

Historically (and personally), I am interested in how the presentation of code changes things. I originate my big data code/UX framework around the Treaty of Tordesillas of 1494: the first entry of a binary-code-based mode of thought that separated the world into 1's and 0's (belonging to Portugal or Seville). That first piece of code, demarcating a global unknown, has I think been repeated over and over. The code itself is a marker, but there is also presentation, ceremony, etc., and how they present the code.

So the spreadsheets, to me, trigger a question: "So here is a piece of database for how enslavers would code a slave to themselves; what would they tell others?"

How might that code be presented on a runaway slave notice, or a clinical study and why?

Yes. This also makes me think about coders as artists (and to address the question around the coder's responsibility). I'm thinking about both arts-based and design-based approaches to advocating for transparency in technical systems, namely those systems that power the codes that articulate social justice movements. Critical technologists, artists, researchers, and activists continue to wrestle with how to make visible systems that are often hidden behind seamless user interfaces and complex algorithms. I'm working on the idea of transdata storytelling, which I describe as an approach/method to emphasize the role of visual and non-visual code to tell stories that also make visible technical systems across multiple platforms.

This thread is helping me think through so much!

@KIBerens I downloaded the DB and took a look at the values stored in COMMENTS. Of the 100,666 records in the DB, 46,103 have a non-empty COMMENTS field. Some offer biographical detail that does not fit into the existing categories, but many others concern the provenance of the data. For example, several COMMENTS describe difficulty reading the source material or make note of missing information. In this sense, the COMMENTS illuminate the process of interpretation and translation between the primary source documents and the database. (Perhaps this metadata provides a starting point for @mwidner's question about the original texts?)
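For anyone who wants to repeat this kind of count on the CSV export, here is a minimal sketch using only Python's standard library. The column name COMMENTS comes from the database as described above; everything else is an assumption for illustration. In particular, the sample rows are invented placeholders, not records from the database, and `count_nonempty` is a hypothetical helper name.

```python
import csv
import io

# Hypothetical stand-in for a CSV export. These rows are invented
# placeholders for illustration, NOT records from the Hall database.
SAMPLE_CSV = """ID,NAME,COMMENTS
1,placeholder-a,source page partly illegible
2,placeholder-b,
3,placeholder-c," "
4,placeholder-d,recorded as part of a group
"""


def count_nonempty(rows, field="COMMENTS"):
    """Return (total, nonempty) for `field`; whitespace-only counts as empty."""
    total = 0
    nonempty = 0
    for row in rows:
        total += 1
        # A missing column or a None value is treated as an empty string.
        if (row.get(field) or "").strip():
            nonempty += 1
    return total, nonempty


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
total, nonempty = count_nonempty(rows)
print(f"{nonempty} of {total} records have a non-empty COMMENTS field")
```

To run it against a real export, you would swap the `io.StringIO(SAMPLE_CSV)` stand-in for an opened file. Note that what counts as "empty" (here, blank or whitespace-only) is itself an interpretive choice that changes the total.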

The COMMENTS gave me a way into @Sydette's question about different interfaces for the same data. Consolidating 101 years of records into a single file feels like a significant transformation, even if most of the data is transcribed faithfully from the primary sources. As a speculative exercise, I am trying to think about what W. E. B. Du Bois might have done with these documents. (And now I'm imagining Du Bois firing up RStudio...)

However, the COMMENTS also implicitly reveal relationships among individual people. As Hall notes in her introduction to the CD-ROM notes, these data were created as a by-product of economic transactions in Louisiana: weddings and dowry, deaths and inheritance, criminal cases, or ships arriving to port. As a result, some COMMENTS recur, verbatim, on hundreds of records because the people they represent were part of a single transaction. As a reader, I found the repetition of the COMMENTS column--row upon row with the same notes--especially arresting.
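The verbatim repetition described above can be surfaced with a simple tally. This is a rough sketch, not a claim about how anyone in this thread actually did it: `recurring_comments` is a hypothetical helper, the rows are invented placeholders rather than real records, and the matching is exact-string only, so near-duplicate notes would be counted separately.

```python
from collections import Counter

# Invented placeholder rows for illustration, NOT records from the database.
SAMPLE_ROWS = [
    {"ID": "1", "COMMENTS": "group sale, same act"},
    {"ID": "2", "COMMENTS": "group sale, same act"},
    {"ID": "3", "COMMENTS": "page torn"},
    {"ID": "4", "COMMENTS": ""},
    {"ID": "5", "COMMENTS": "group sale, same act"},
    {"ID": "6", "COMMENTS": "page torn"},
    {"ID": "7", "COMMENTS": "name uncertain"},
]


def recurring_comments(rows, field="COMMENTS", min_count=2):
    """List (comment, count) pairs for comments that appear on several rows."""
    counts = Counter()
    for row in rows:
        text = (row.get(field) or "").strip()
        if text:  # skip empty comments so they do not dominate the tally
            counts[text] += 1
    # most_common() orders by count, highest first.
    return [(text, n) for text, n in counts.most_common() if n >= min_count]


for text, n in recurring_comments(SAMPLE_ROWS):
    print(f"{n} records share the comment: {text!r}")
```

Each repeated comment is then a candidate marker of a shared transaction, which a reader would still need to confirm against the other fields.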

For those of you who are deeper into the relevant literature, how was Hall's work received by other scholars at the time? Was database production stigmatized at all following the 1970s debates about quantitative history? (I'm thinking about Fogel and Engerman's Time on the Cross (1974), Gutman's Slavery and the Numbers Game (1975), etc.) It strikes me that Hall's 1992 book was based on a variety of sources and analytic techniques. Statistical analysis was just one tool in her belt, despite having created such a unique resource for quantitative research.

SO much here--Yes!!!!

Just want to think about this a little bit @KIBerens

...because once the spreadsheet is exported, this is the field that is the most interesting for the qualitative or humanities (arguably) researcher.

Screenshot

This is where the person that was unmade into a captive and was then remade into data became a human to me.

Back soon....

@jmjafrx Wow: for the field data about freedom, and your sentence after it.

@driscoll:

"[S]everal COMMENTS describe difficulty reading the source material or make note of missing information. In this sense, the COMMENTS illuminate the process of interpretation and translation between the primary source documents and the database. (Perhaps this metadata provides a starting point for @mwidner's question about the original texts?)" So much here for me to think about and explore, including @sydette's observation about interfaces. Thanks.

@fredhampton wrote:

The idea of a Commons (and its obverse, the alienated individual) makes me think, also, of the methodological "individualism" (at the level of data) promoted by such things as object-oriented programming. To conceive of a program as objects calling upon functions to act upon themselves is an object-centered view of the world — different from functions being written to accept certain kinds of objects in particular.
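To make the contrast in that passage concrete, here is a small Python sketch under stated assumptions: the class names, the `Named` protocol, and both `describe` definitions are hypothetical illustrations, not drawn from any project discussed in this thread.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Person:
    name: str

    # Object-centered view: the behaviour belongs to the object,
    # which is asked to act upon itself.
    def describe(self) -> str:
        return f"Person named {self.name}"


class Named(Protocol):
    """Anything with a `name` attribute, whatever its class."""

    name: str


# Function-centered view: the behaviour lives outside any one object
# and is written to accept a certain kind of object.
def describe(thing: Named) -> str:
    return f"{type(thing).__name__} named {thing.name}"


@dataclass
class Ship:
    name: str


person = Person("example")
print(person.describe())                 # the object describes itself
print(describe(person))                  # a shared function describes it
print(describe(Ship("example vessel")))  # the same function, another kind of object
```

In the first style, each class has to carry its own `describe`; in the second, one function defines what description means for everything that fits the shape, which is closer to the shared, commons-like arrangement the passage gestures at.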

@jmjafrx, I don't understand your Black Code Studies. Do you guys mean Blacks do not have any other history apart from slavery, sexuality, and gender? I perceive you are not dealing with all-inclusive Black histories in regard to coding.

Happy to clarify. "Black" as a racial formation does not exist apart from sexuality and gender. Certainly what is "Black" does change form across time and space--when it does, it does not do so apart from sexuality and gender. Arguably "Black" does not exist apart from slavery, colonialism, imperialism, globalization. I say "arguably" for the second because I argue that this includes African history and contemporary life, but not all Africanists would argue the same, despite the impact that slavery, colonialism, imperialism, and globalization have had on the continent.

I find this to be expressly and humanely inclusive.

@Sydette writes:

spit out my coffee

Dammit Sydette. Okay and also yes. I normally begin with the 1685 Code Noir, but this, this goes even deeper...thank you.

@ggimse

What would a programming language look like that made this impossible? Or would that require another code or script to be added each time?

I guess I am asking, is there a hack for this disconnect at the code level?

Not to return to the comment field in the Hall database example, but in some ways it seems to function that way. It is the field that captures the impossible data the code can't formulate on its own (that the enslaved might not be property but could also be humans and act as sentient beings do), but it also gestures across the fields in interesting ways to fathers, wives, siblings, or co-insurrectionists elsewhere in the record.

There are also related value fields in databases that populate automatically, but this may be a different matter....

@driscoll writes

YES. So Hall's database was released on CD-ROM and was one of the first of its kind and received well, but perhaps without the fanfare it deserved. Open-source libraries like ibiblio existed but didn't have the kind of cachet they might have today. But the database was well funded and well supported at the time. It is still available (in CD-ROM form) in libraries around the U.S. and in France (it may be in Senegal), but it never had the institutional support to have a website created out of it in the same way the Trans-Atlantic Slave Trade Database was (sponsored by Harvard early on and later Emory).

Hall's book was extremely well received in some circles and critiqued vigorously in others for her underlying premise that Africans retained a sense of their own ethnicity and worldview once transported across the Atlantic. This was a huge historiographical debate at the time and still is in some circles, although it is now more widely accepted that Africans of various ethnicities, depending on the circumstances of the trade in particular regions, did retain their own lifeways. Although her research was imputed to be false because of resistance to that argument, her research still holds. And even today, the slave trade database records of the slave trade to Louisiana are the same as the ones Hall uncovered.

Some of this is also gendered as she was the one woman (and older) working in a field of statistical analysis that was mainly closed to women and she was working on African diasporic life....quite a complex figure and project.

@jmjafrx Thanks so much for bringing that history to the table. Learning about the dramas behind the data reveals some powerful continuities across generations of scholars. I am looking forward to spending more time exploring Hall's work this summer.

It's also fascinating to see how the database itself is continuously transformed and translated to accommodate new technical standards. The zip file that I downloaded a few days ago included a half dozen different formats--from raw SQL to CSV to Microsoft Access. (Not to mention that I spent a few minutes downloading a database that would have once required a heavy envelope and book of stamps to transmit.)

@driscoll

I want to return to so much in this thread, but you bring up something here worth highlighting -- that as much as Black Code Studies is about the ways poc operate online (including manipulating code) in fugitive and maroon ways, structure, institutions, and funding play a huge role in whether projects are maintained. That this database didn't transform into a website interface like the TASTD is about the institutional support it had (funding, teams, publicity, and more). And, as always, what is an individual case study of a U.S.-based Africana-related project in this instance can be seen in ripple effects across Global South DH. Thinking of Create Caribbean, which is run by Schuyler Esprit from Dominica, an island that was hit hard by the hurricanes this summer. She is still rebuilding. Kelly Baker Josephs also did a fantastic talk at DH + Design in May at Georgia Tech about Digital Caribbean resources that have appeared and disappeared because the support they have is based on one person scraping together funds and time when they have it, paying for server space out of pocket, etc.

How can the insurgence of fugitivity and the digital also reckon with structures? I have a half thought that, as a whole, we need to better appreciate the ephemeral in our digital (which is why I am a huge stan for social media as intellectual production because it is ephemeral) and not see stable (or big) projects with long shelf lives as inherently better than short-term, episodic, or slightly out-dated (programming-language-wise) projects that may be doing even more cutting-edge work. But I would love your thoughts.....

I don't want to be the great defender of dehumanization through spreadsheets here, but I do want to try to think about the ethic behind these databases, specifically the sheer amount of effort that went into their production. @Jeremydouglass once told me that one of the best interventions CCS can make is to help people see the humans in the code. So following upon the work we've been doing with Margaret Hamilton and the code for Apollo 11, and the work we've begun on Gwendolyn Midlo Hall's database, as we seek the humans encoded in (obscured by?) this database, let us also seek the humans who worked on the codes that helped develop these research tools. As I was reflecting on how to read the Estimates spreadsheet, I found one programmer who worked on the Transatlantic Slave Trade Database.

I am also pursuing our other current of inquiry, trying to explore methods of critically reading the functions found in spreadsheets.

At risk of obscuring others, I want to reflect on the work of Paul Lachance, who came to this project long after Philip Curtin and Herbert Klein and their punchcards and after Hall's work on her database in the 1990s. In the Acknowledgements of Extending the Frontiers: Essays on the New Transatlantic Slave Trade Database, David Eltis writes,

I haven't yet found much about Paul Lachance or his background....

So to reflect on this database as code, we might also reflect on Lachance's methods. In "Estimates of the Size and Direction of Transatlantic Slave Trade," David Eltis and Paul Lachance offer an account of their methods of recovery:

To examine a little closer, I am again looking at the sheet of Estimates that we've made available here.

In that document, the index page lists some useful abbreviations:

Country spreadsheets have breakdowns that look like this:

(I offer these details as a way of inviting us to reflect on some other code in this spreadsheet.)

These estimates have sought to recover a record of scope, deeply rooted in numbers, but drawn from multiple sets of records (again reflecting an ethic of care). Assuming that constructing a more accurate portrait of the scope, the flow, and the humans traded is useful to us, this endeavor offers another portrait of critical intervention through code.

So, while I appreciate the critique of the dehumanizing effects of numerical data representation, the problem of the unnatural disordering of humanity in their source documents, and the call for new kinds of databases that try to preserve that humanity, I think Critical Code Studies, through its reflection on Hall and Lachance, can also offer a sense of the human researchers working on and around the database -- working with an ethic of care to understand the scope of this brutal passage in world history.

It's surely in part due to my background, but I'm very interested in this failure point, "the impossible data the code can't formulate on its own." Like my music example in the other thread, it points to intractable assumptions about who or what counts that get built in.

And I don't think that there's a way around it--if you don't simplify the data, the map is as big as the territory, and that simplification absolutely enables things that would otherwise be impossible. But I do think asking about affordances and limitations--what does it let us do and what does it foreclose?--is key for any encoding.